Volume 23, Issue 4

Understanding the Harm Teens Experience on Social Media

Arturo Béjar

A systematic approach to mitigating negative experiences online

The current approach to online safety, focusing on objectively harmful content and deletion or downranking, is necessary but not sufficient, as it addresses only a small fraction of the harm that teens experience. In order to understand harm, it is essential to understand it from their perspective by surveying and creating safety tools and reporting that make it easy to capture what happens and provide immediate help. Many of the recommendations in this article come from what you learn when you analyze behavioral correlates: that you need approaches that rely on conduct in context, better personalization, and providing feedback to actors.

Privacy and Rights

Unsolved Problems in MLOps

Niall Murphy, Todd Underwood

Either find a better paradigm or fix the ones we're using now.

The excitement with AI is carrying us along in a big wave, but the practitioners whose job it is to make this all work are scrambling behind the scenes, often more in dread than excitement. In some cases they are using outdated techniques; in others, approaches that work only for now. However, we should be casting about for either a better paradigm or a better patching-up of the existing paradigms.

AI

Guardians of the Agents

Erik Meijer

Formal verification of AI workflows

To guard against models going off the rails during inference, people often use so-called guardrails to dynamically monitor, filter, and control model responses for problematic content. Guardrails, however, come with their own set of problems, such as false positives caused by pattern matching against a fixed set of forbidden words. This mathematical proof-based approach addresses these limitations by providing deterministic and verifiable assurances of safety without the need to trust the AI or any of the artifacts it produces.

AI

Moving Faster by Not Breaking Things

Justin Sheehy, Jonathan Reed

Initial investments allow for a fearless approach to pushing changes.

An engineering team that can move without fear, knowing that they have made themselves safe to do so, can ship more often and more quickly and make more dramatic changes without hesitation. This feels great to individual engineers and enables those engineers to be more effective for the business they work in. A bit of investment in safety pays huge dividends in speed as well as by reducing the frequency and severity of change-triggered incidents.

Development

Operations and Life

No One Has Time to Work on Your Project

Strata Chalup (Standing in for Thomas A. Limoncelli)

How to work effectively with overwhelmed people to get things done

What if you could apply a few basic principles that would help make working on your project seem more attractive and worthwhile to people? Success in these matters boils down to a few basic principles and assumptions that seem obvious and unremarkable. What makes them effective is when you manage to combine all of them and apply them consistently.

Business/Management,

Operations and Life

Kode Vicious

The Process

From start to finish

While the Scientific Method gives us a way to evaluate a hypothesis, a Scientific Process allows us to organize our minds to form these hypotheses, lay out a piece of code, organize a project, or debug a program. It's how we get to the point of focusing enough to solve the incredibly challenging problems we've set for ourselves.

Development,

Kode Vicious

Volume 23, Issue 3

Special Issue on WebAssembly

WebAssembly: Yes, but for What?

Andy Wingo

The keys to a successful Wasm deployment

WebAssembly (Wasm) has found a niche but not yet filled its habitable space. What is it that makes for a successful deployment? WebAssembly turns 10 this year, but in the words of William Gibson, we are now as ever in the unevenly distributed future. Here, we look at early Wasm wins and losses, identify winning patterns, and extract commonalities between these patterns. From those, we predict the future, suggesting new areas where Wasm will find purchase in the next two to three years.

Web Development

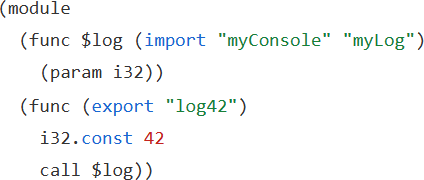

WebAssembly: How Low Can a Bytecode Go?

Ben Titzer

New performance and capabilities

Wasm is still growing with new features to address performance gaps as well as recurring pain points for both languages and embedders. Wasm has a wide set of use cases outside of the web, with applications from cloud/edge computing to embedded and cyber-physical systems, databases, application plug-in systems, and more. With a completely open and rigorous specification, it has unlocked a plethora of exciting new systems that use Wasm to bring programmability large and small. With many languages and many targets, Wasm could one day become the universal execution format for compiled applications.

Web Development

When Is WebAssembly Going to Get DOM Support?

Daniel Ehrenberg

Or, how I learned to stop worrying and love glue code

What should be relevant for working software developers is not, "Can I write pure Wasm and have direct access to the DOM while avoiding touching any JavaScript ever?" Instead, the question should be, "Can I build my C#/Go/Python library/app into my website so it runs with good performance?" Nobody is going to want to write that bytecode directly, even if some utilities are added to make it easier to access the DOM. WebAssembly should ideally be an implementation detail that developers don't have to think about. While this isn't quite the case today, the thesis of Wasm is, and must be, that it's okay to have a build step.

Web Development

Concurrency in WebAssembly

Conrad Watt

Experiments in the web and beyond

Mismatches between the interfaces promised to programmers by source languages and the capabilities of the underlying web platform are a constant trap in compiling to Wasm. Even simple examples such as a C program using the language's native file-system API present difficulties. Often such gaps can be papered over by the compilation toolchain somewhat automatically, without the developer needing to know all of the details so long as their code runs correctly end to end. This state of affairs is strained to its limits when compiling programs for the web that use multicore concurrency features. This article aims to describe how concurrent programs are compiled to Wasm today given the unique limitations that the Web operates under with respect to multi-core concurrency support and also to highlight some of the current discussions of standards that are taking place around further expanding Wasm's concurrency capabilities.

Web Development

Unleashing the Power of End-User Programmable AI

Erik Meijer

Creating an AI-first program-synthesis framework

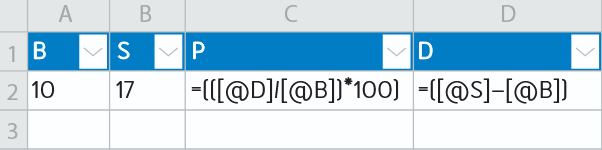

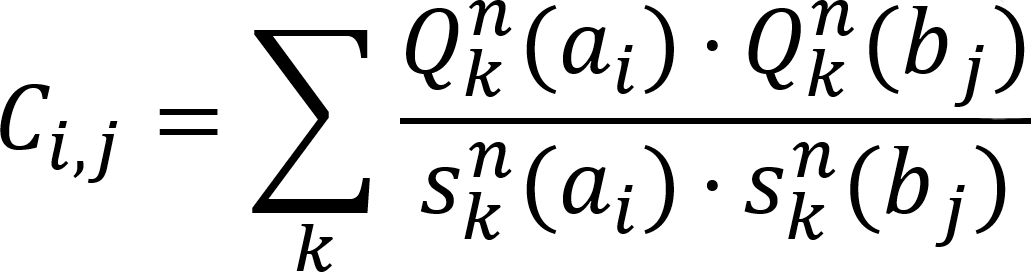

As a demonstration of what can be accomplished with contemporary LLMs, this paper outlines the high-level design of an AI-first, program-synthesis framework built around a new programming language, Universalis, designed for knowledge workers to read, optimized for our neural computer to execute, and ready to be analyzed and manipulated by an accompanying set of tools. We call the language Universalis in honor of Gottfried Wilhelm Leibniz. Leibniz's centuries-old program of a universal science for coordinating all human knowledge into a systematic whole comprises two parts: (1) a universal notation by use of which any item of information whatsoever can be recorded naturally and systematically, and (2) a means of manipulating the knowledge thus recorded in a computational fashion, to reveal its logical interrelations and consequences. Exactly what current-day LLMs provide!

AI

Bridging the Moat:

Security Is Part of Every Critical User Journey

Phil Vachon

How else would you make sure that product security decisions serve your customers?

Next time you're working on a new product or feature or the next time you're yawning your way through a product development meeting, raise your hand and propose that security outcomes and risks be defined at each step along critical user journeys. Whether you're building an integration between enterprise systems, a user-facing application, or a platform meant to save your customers complexity and money, putting security at the forefront of the product team's challenge will be transformative.

Bridging the Moat,

Security

Kode Vicious

In Search of Quietude

Learning to say no to interruption

KV is old enough to remember a time before ubiquitous cell phones, a world in which email was the predominant form of intra- and interoffice communication, and it was perfectly normal not to read your email for hours in order to concentrate on a task. Of course, back then we also worked in offices where co-workers would readily walk in unannounced to interrupt us. That, too, was annoying but could easily be deterred through the clever use of headphones.

Business/Management,

Development,

Kode Vicious

Volume 23, Issue 2

AI: It's All About Inference Now

Michael Gschwind

Model inference has become the critical driver for model performance.

As the scaling of pretraining is reaching a plateau of diminishing returns, model inference is quickly becoming an important driver for model performance. Today, test-time compute scaling offers a new, exciting avenue to increase model performance beyond what can be achieved with training, and test-time compute techniques cover a fertile area for many more breakthroughs in AI. Innovations using ensemble methods, iterative refinement, repeated sampling, retrieval augmentation, chain-of-thought reasoning, search, and agentic ensembles are already yielding improvements in model quality and offer additional opportunities for future growth.

AI

Develop, Deploy, Operate

Titus Winters, Leah Rivers, and Salim Virji

A holistic model for understanding the costs and value of software development

By taking a holistic view of the commercial software-development process, we have identified tensions between various factors and where changes in one phase, or to infrastructure, affect other phases.

We have distinguished four distinct forms of impact, warned against measuring against unknown counterfactuals, and suggested a consensus mechanism for estimating DDR (defect detection and resolution) costs.

Our approach balances product outcomes and the strategic need for change with both the human and machine costs of producing valuable software.

With this model, the process of commercial software development could become more comprehensible across roles and levels and therefore more easily improved within an organization.

Business/Management,

Development

Generative AI at the Edge: Challenges and Opportunities

Vijay Janapa Reddi

The next phase in AI deployment

Generative AI at the edge is the next phase in AI's deployment.

By tackling the technical hurdles and establishing new frameworks, we can ensure this transition is successful and beneficial.

The coming years will likely see embodied, federated, and cooperative small models become commonplace, quietly working to enhance our lives in the background, much as embedded microcontrollers did in the previous tech generation.

The difference is, these models won't just compute; they will communicate, create, and adapt.

AI

Research for Practice

The Point is Addressing

Daniel Bittman with introduction by Peter Alvaro

A brief tour of efforts to reimagine programming in a world of changing memories

Even something as innocent as addressing comes from a rich design space filled with tradeoffs between important considerations such as scaling, transparency, overhead, and programmer control. These tradeoffs are just some of the examples of the many challenges facing programmers today, especially as we drive our applications to larger scales. The way we refer to and address data matters, with reasons ranging from speed to complexity to consistency, and can have unexpected effects down the line if we do not carefully consider how we talk about and refer to data at large.

Memory,

Research for Practice

Drill Bits

Sandboxing: Foolproof Boundaries vs. Unbounded Foolishness

Terence Kelly with Special Guest Borer Edison Fuh

Sandboxing mitigates the risks of software so large and complex that it's likely to harbor security vulnerabilities. To safely harness useful yet ominously opaque libraries, a simple mechanism provides ironclad confinement—or does it?

Code,

Development,

Drill Bits,

Security

Kode Vicious

Can't We Have Nice Things?

Careful crafting and the longevity of code

We build apparatus in order to show some effect we're trying to discover or measure. A good example is Faraday's motor experiment, which showed the interaction between electricity and magnetism. The apparatus has several components, but the main feature is that it makes visible an invisible force: electromagnetism. Faraday clearly had a hypothesis about the interaction between electricity and magnetism, and all science starts from a hypothesis. The next step was to show, through experiment, an effect that proved or disproved the hypothesis. This is how empiricists operate. They have a hunch, build an apparatus, run an experiment, refine the hunch, and then wash, rinse, and repeat.

Code,

Development,

Kode Vicious

The Soft Side of Software

Peer Mentoring

Kate Matsudaira

My favorite growth hack for engineers and leaders

Stop waiting for a senior mentor to appear. Your peers are some of the most valuable mentors you'll ever find. Start leveraging those relationships, sharing insights, and bringing value to every conversation. Your career will thank you for it.

Business/Management,

The Soft Side of Software